Google implements NEW Category Search through Upgraded Google Images

PREVIOUSLY PUBLISHED ON EXAMINER

Face recognition, Goggles, and now… A NEW and improved Image Search by Google.

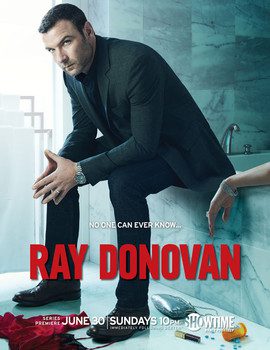

If you constantly use the world’s leading search engine, Google, you have probably noticed something different about Google Images in the last few days, depending on your search terms. Personally, my first experience with the new Image Search feature came while I was trying to find graphics for an upcoming TV series that hits Showtime on June 30. That show is Ray Donovan, and I was working on a graphic for Kwame Patterson (known for his role as “Monk” on The Wire), who appears on the series as rapper/producer Re-Kon.

When I noticed categories in Google’s Image Search for “Ray Donovan” and saw groupings such as “Cast,” “Set,” and “Series,” I thought to myself that this was very “cool” and wondered how I could do it myself. So, I went searching for information on how to do it. After all, my profession IS Internet Marketing.

We are ashamed of ourselves if we don’t know about something related to our field. And, I just HAD to know more! Unfortunately, there was NO INFORMATION to be found. And, it continued plaguing me as I searched over and over again within Google and tested other search terms to learn what allows this grouping to occur.

So, I figured it out. Alt text and page context would group these images into subcategories on Google once the spider crawls the pages and terms in question. But, just like Veruca Salt, “I want it now!” So, I continued to search for information. Then, today (June 12, 2013), Google released some great information via their blog on BlogSpot.

Apparently, last month at Google I/O, they showed “a major upgrade to the photos experience.” Google has caught on that not everyone cares to name their content (one way that Internet Marketing becomes a success), and has come up with a new way to search photos with visual recognition.

If you’re like me, you take many photos and don’t remember which folder you put them in, because you didn’t need them at the time. Now, you will be able to find them much more easily… especially if you take advantage of Google Photos, which recently allotted 15GB of space per Google user.

In previous years, image search ranking relied on alt text, page descriptions and content naming. In the past year, we saw Google implement color search technology, which let you search images by color. Google makes a joke that “the average toddler is better at understanding what is in a photo than the world’s most powerful computers running state of the art algorithms.” And, laughably, that is often true!

They go on to inform us: “This past October the state of the art seemed to move things a bit closer to toddler performance. A system which used deep learning and convolutional neural networks easily beat out more traditional approaches in the ImageNet computer vision competition designed to test image understanding. The winning team was from Professor Geoffrey Hinton’s group at the University of Toronto.

“We built and trained models similar to those from the winning team using software infrastructure for training large-scale neural networks developed at Google in a group started by Jeff Dean and Andrew Ng. When we evaluated these models, we were impressed; on our test set we saw double the average precision when compared to other approaches we had tried.

“We knew we had found what we needed to make photo searching easier for people using Google. We acquired the rights to the technology and went full speed ahead adapting it to run at large scale on Google’s computers. We took cutting edge research straight out of an academic research lab and launched it, in just a little over six months. You can try it out at photos.google.com.”

Throughout experimentation and implementation, Google has become aware of a few mistakes the computer will make, such as “mistaking a banana slug for a snake” or a “photo of a donkey for a dog.” Of course, in those situations the system had little to go on beyond its recognition of visual elements.

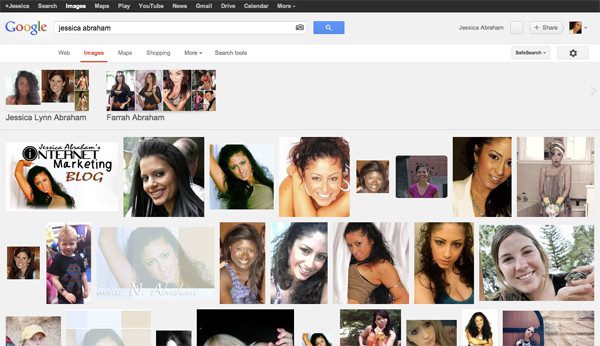

As a matter of fact, when I searched my own name, Google was still in the process of grouping “Jessica Abraham”s, and I wanted to understand this more. One category was appearing with the controversial “Farrah Abraham,” so I searched her separately (I didn’t want to contribute to her ranking in MY category! hahaha!).

When I searched “Farrah Abraham,” I got some pretty in-depth results in detailed categories! There were her modeling pictures. I saw a category for the fight with a Kardashian. And, well… Let’s just say that it definitely categorized this search term. This is actually the search that allowed me to understand the new feature on my own (minus the visual categorization). I was able to look into the page source to try to build on my understanding of it.
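For the curious, here is a minimal Python sketch of the alt-text half of that theory. To be clear, this is only my guess at the idea, not Google’s actual method, and it ignores the visual recognition side entirely. The sample page, the file names, and the `group_images_by_keyword` helper are all made up for illustration; the only real pieces are Python’s built-in `html.parser` and `collections` modules.

```python
from collections import defaultdict
from html.parser import HTMLParser


class ImageAltCollector(HTMLParser):
    """Collects (src, alt) pairs from every <img> tag in a page."""

    def __init__(self):
        super().__init__()
        self.images = []

    def handle_starttag(self, tag, attrs):
        if tag == "img":
            attr_map = dict(attrs)
            # A missing or empty alt attribute is treated as empty text.
            self.images.append((attr_map.get("src", ""), attr_map.get("alt") or ""))


def group_images_by_keyword(html, keywords):
    """Hypothetical helper: file each image under every keyword in its alt text."""
    collector = ImageAltCollector()
    collector.feed(html)
    groups = defaultdict(list)
    for src, alt in collector.images:
        words = alt.lower().split()
        for keyword in keywords:
            if keyword.lower() in words:
                groups[keyword].append(src)
    return dict(groups)


# Made-up page source, loosely modeled on the "Ray Donovan" search.
SAMPLE_PAGE = """
<img src="cast-1.jpg" alt="Ray Donovan cast photo">
<img src="set-1.jpg" alt="Ray Donovan set in Los Angeles">
<img src="cast-2.jpg" alt="Kwame Patterson with the cast">
<img src="logo.png">
"""

groups = group_images_by_keyword(SAMPLE_PAGE, ["Cast", "Set", "Series"])
print(groups)
```

Notice that the image with no alt text never lands in any group, which is exactly the gap that visual recognition is meant to fill.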

To recap, Google is basically using all current technologies and implementing improved technologies to map out and categorize images on Google. It’s like Google has become a very powerful Artificial Intelligence source.

I’m sure implementing Google into a robot will be the next phase in their journey. As Sci-Fi as that sounds, it would definitely make sense in emulating those films that warn us of Robot Apocalypses. I wonder if that is why tornadoes are sweeping all over the country at this very moment (bad Apocalyptic joke).

Wow! Can you imagine the stuff you would find searching yourself from here on out? Or, what about your new boyfriend/girlfriend who finds your photos amongst those of one of your most humiliating exes?! Regardless, you have just made my life easier, harder and more interesting, Google! I applaud you!